USER GUIDE

Dedoose is an easy-to-use, collaborative, web-based application that facilitates all types of research data management and analysis.

Here's what you need to know about how to use it.

Download full User Guide here:

Training Center

The Dedoose Training Center is designed to help research teams build and maintain inter-rater reliability for both code application (the application of codes to excerpts) and code weighting/rating (the application of specified weighting/rating scales associated with code application). Training sessions (‘tests’) are based on the coding and rating of an ‘expert’ coder/rater. Creating a training session involves selecting the codes to include in the test, selecting the previously coded/rated excerpts that make up the test, and then specifying a name and description for the test. ‘Trainees’ access the session and are prompted to apply codes or weights to the set of excerpts making up the session. During the exercise, the trainee is blind to the work done by the expert. Upon completion, the results present Cohen’s Kappa coefficient for code application, or Pearson’s correlation coefficient for code weighting/rating, both overall and for each individual code, as indexes of inter-rater reliability, along with details of where the ‘trainee’ and ‘expert’ agreed or disagreed.

Creating a Training Center Test

Instructions on creating tests greet you when first accessing the Training Center Workspace. To create a new test:

- Click the ‘Create New Test’ button in the lower right corner of the workspace

- Select either ‘Code Application’ or ‘Code Weighting’ (the process is identical for setting up tests and our illustration here will focus on code application tests) and click ‘Next’

- Select the codes to be included in the test and click ‘Next’. Three codes will be used for this example: Reading by Primary Caretaker, Reading by Others, and Letter Recognition.

- After clicking ‘Next’ in the previous step, you are presented with the SuperMegaGrid and prompted to select the excerpts to include in the test. Note that a column is shown for each code included in the test, with ‘true’ or ‘false’ in each row as a cue for locating the excerpts that may be most appropriate for the test. From this view you can filter to particular sets and select the excerpts most useful for the training. After selecting the desired excerpts, click ‘Next’.

- Provide a title and description for the test, click ‘Save,’ and setup is complete.

Tip: It is often most useful to focus the analysis of inter-rater reliability on those codes that are most important to the research questions, that are used on a relatively frequent basis, and that are associated with a well-documented set of application criteria (the rules for when the code should, or should not, be applied to a particular excerpt). In the Dedoose Training Center, Cohen’s Kappa is calculated on an 'event' basis, with each excerpt being an event and each code either applied or not applied to the excerpt. Thus, if there are many events for which a particular code is not applied (and not appropriate), there will be frequent agreements between the ‘expert’ and the ‘trainee’ in not applying the code, which can disproportionately (and misleadingly) inflate the resulting Cohen’s Kappa coefficient.
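To make the event-based calculation in the tip concrete, here is a minimal Python sketch of the standard Cohen’s Kappa formula for a single code, where each excerpt contributes one applied/not-applied decision per rater. The function name `cohens_kappa` and the data are made up for illustration, and this is not Dedoose’s own implementation. The second call pads the same decisions with 90 excerpts where neither rater applied the code, showing how such agreements raise the coefficient.

```python
def cohens_kappa(expert, trainee):
    """Cohen's kappa for one code, given one True/False decision per excerpt."""
    if len(expert) != len(trainee) or not expert:
        raise ValueError("expected two non-empty lists of equal length")
    n = len(expert)
    # Observed agreement: share of excerpts where both raters decided the same.
    p_observed = sum(e == t for e, t in zip(expert, trainee)) / n
    # Chance agreement, from each rater's own rate of applying the code.
    expert_rate = sum(expert) / n
    trainee_rate = sum(trainee) / n
    p_chance = expert_rate * trainee_rate + (1 - expert_rate) * (1 - trainee_rate)
    if p_chance == 1:
        # Both raters made the same constant decision; kappa is undefined.
        return float("nan")
    return (p_observed - p_chance) / (1 - p_chance)


# Made-up decisions for a single code across 10 excerpts (illustrative only).
expert = [True, True, False, False, True, False, False, False, False, False]
trainee = [True, False, False, False, True, False, False, False, False, True]
kappa_small = cohens_kappa(expert, trainee)

# The same decisions plus 90 excerpts where neither rater applied the code.
kappa_padded = cohens_kappa(expert + [False] * 90, trainee + [False] * 90)

print(round(kappa_small, 3), round(kappa_padded, 3))  # prints 0.524 0.656
```

The two disagreements are identical in both runs; only the number of mutual non-applications changes, which is why the tip suggests testing codes that are applied reasonably often.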

Taking a Test

Once a test is saved to the training center test library, a trainee can take the test by clicking the particular test in the list and then clicking the ‘Take this test’ button in the lower right corner of the pop-up.

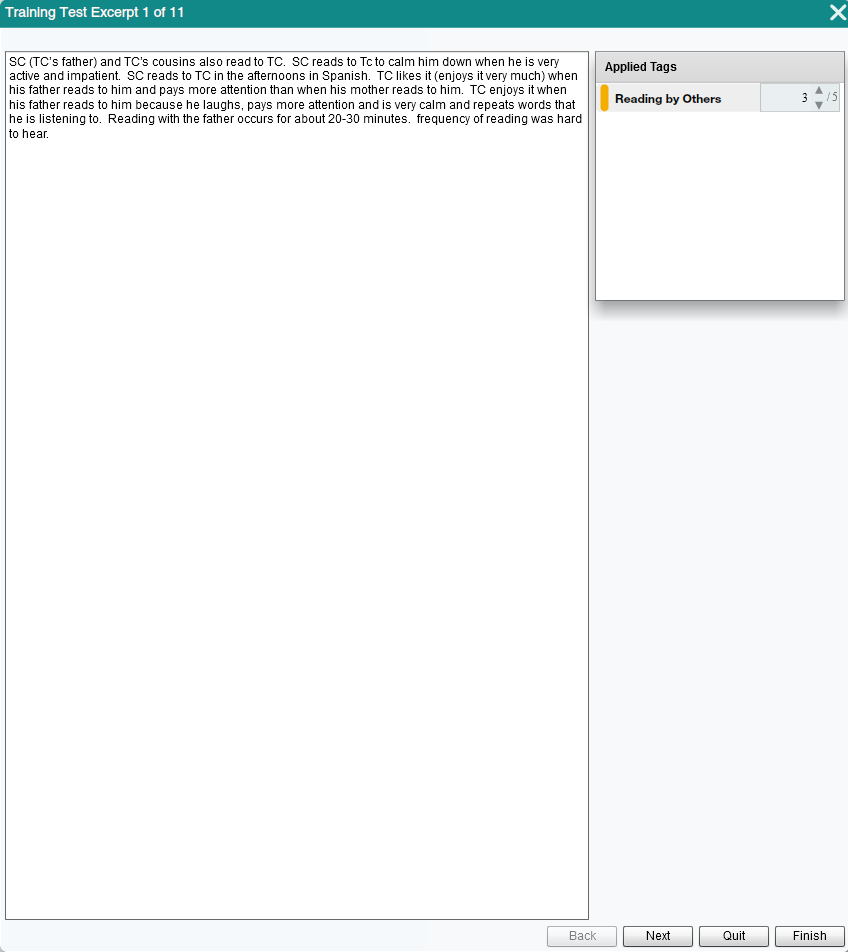

Taking a Code Application Training Test

In a code application test, the trainee is presented with each excerpt and the codes designated for the test, and is then expected to apply the appropriate code(s) to each excerpt. They can move back and forth through the test using the ‘Back’ and ‘Next’ buttons until they are finished. Here’s a screenshot of a test excerpt before any codes have been applied:

Upon completion, the results of the test are presented, including a Pooled Cohen’s Kappa coefficient and Cohen’s Kappa for each of the codes included in the test, along with documentation and citations for interpreting and reporting these inter-rater reliability results in manuscripts and reports.

Further, by clicking the ‘View Code Applications’ button, teams can review, excerpt by excerpt, how the ‘expert’s’ and ‘trainee’s’ code applications corresponded. This information is invaluable in developing and documenting code application criteria and in building and maintaining desirable levels of inter-rater reliability across team members.
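For orientation, the per-code coefficient follows the usual Cohen’s Kappa definition, and a pooled coefficient is commonly formed by averaging the observed and chance agreement across codes before taking the ratio. In the formulas below, P_{o,c} is the observed agreement and P_{e,c} the chance-expected agreement for code c, and C is the number of codes in the test. Whether Dedoose’s Pooled Cohen’s Kappa uses exactly this averaging is an assumption here; the documentation shown with the test results is the authoritative source.

```latex
\kappa_c = \frac{P_{o,c} - P_{e,c}}{1 - P_{e,c}},
\qquad
\kappa_{\text{pooled}} = \frac{\bar{P}_o - \bar{P}_e}{1 - \bar{P}_e},
\qquad
\bar{P}_o = \frac{1}{C}\sum_{c=1}^{C} P_{o,c},
\quad
\bar{P}_e = \frac{1}{C}\sum_{c=1}^{C} P_{e,c}
```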

Taking a Code Weighting/Rating Test

As described above, setting up a code weighting/rating test is identical to that of a code application test, though when selecting the codes to include, only those with code weighting activated will be available. Again, once a test is saved to the training center test library, a trainee can take the test by clicking the particular test in the list and then clicking the ‘Take this test’ button in the lower right corner of the pop-up.

In a code weighting/rating test, the trainee is presented with each excerpt and all codes that were applied by the ‘expert’, and is then expected to adjust the weight on each code to the appropriate level for each excerpt. They can move back and forth through the test using the ‘Back’ and ‘Next’ buttons until they are finished.

Here’s a screenshot of a test excerpt before any weights have been set; those shown are the default values:

Upon completion, the results of the test are presented, including:

- An overall (average) Pearson’s correlation coefficient.

- Pearson’s r for each of the codes included in the test.

- Relative difference metrics for each code.

- Documentation and a link to a table of critical values for determining the statistical significance of the result and reporting in articles and reports.
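As a rough illustration of the first two results in this list, the following Python sketch computes Pearson’s r per code from expert and trainee weights and then takes a simple mean across codes. The weights are made up, the helper name `pearson_r` is hypothetical, and treating the overall coefficient as an unweighted mean of the per-code values is an assumption rather than a documented Dedoose detail. The relative difference metrics are not sketched because the guide does not define them.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson's correlation between two equal-length lists of weights."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("expected two lists of equal length with 2+ values")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        # A rater who gave every excerpt the same weight has no variance,
        # so the correlation is undefined.
        return float("nan")
    return covariance / sqrt(var_x * var_y)


# Made-up weights: code name -> (expert weights, trainee weights),
# one weight per excerpt on which the expert applied that code.
weights = {
    "Reading by Others": ([1, 2, 3, 4, 5], [1, 2, 3, 4, 5]),
    "Letter Recognition": ([1, 2, 3, 4, 5], [2, 1, 4, 3, 5]),
}

r_by_code = {code: pearson_r(e, t) for code, (e, t) in weights.items()}
# Assumption: the overall value is the unweighted mean of the per-code values.
r_overall = sum(r_by_code.values()) / len(r_by_code)

for code, r in r_by_code.items():
    print(f"{code}: r = {r:.2f}")
print(f"Overall (average): r = {r_overall:.2f}")
```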

Finally, as with the code application test, teams can click the ‘View Code Applications’ button to review, excerpt by excerpt, how the ‘expert’s’ and ‘trainee’s’ weightings corresponded. These comparisons can then be discussed among the team to develop the criteria for, and establish consistency in, the code weighting/rating process.

Coding Blind to Other Users

At times you may want to work on the same document as someone else without being influenced by the excerpting and coding decisions of others on your team. Or perhaps you want to build your code tree collaboratively, in context, before using the Training Center to test more formally for inter-rater reliability (for more information, see the section on the Training Center). Whatever the reason, here are the steps for what we call ‘coding blind.’ In short, you turn off the work of others (removing it from view) before you begin your own work.

- Enter the Data Set Workspace by clicking the Data Set button in the Main Menu Bar.

- Click the ‘Users’ tab

- Click ‘Deactivate All’

- Click the username of the person whose work you wish to view; this will reactivate that person’s work

- Click the main 'Dataset' tab to check the impact of the filter

- The 'Current Data Set' panel on the left side shows how much information is currently active: the numbers of descriptors, resources, users, codes, and, ultimately, excerpts that remain active

- Close the Data Selector pop-up.

To save this (or any) filter:

- Re-enter the Data Set Workspace while the filter is still active

- Click the ‘Data Set’ tab

- Click ‘Save Current Set’ in the left panel

- Give the filter a title and, if you wish, enter a description (usually a good idea)

- Hit ‘Submit’ and the filter will be saved in the library at the bottom of the popup.

To reactivate a saved filter:

- Re-enter the Data Set Workspace

- Click the ‘Data Set’ tab

- Click on the filter you wish to load in the bottom panel

- Click ‘Load’ in the lower right corner

- Click ‘OK’

- Close the Data Selector pop-up.

To deactivate a filter:

- Re-enter the Data Set Workspace

- Click the ‘Data Set’ tab

- Click ‘Clear Current Set’ in the left side panel.

Note: Closing Dedoose will also clear any active filters.

Document Cloning

Beyond the Dedoose Training Center and coding blind strategy, taking advantage of our document cloning feature is one more way you can work with your team toward building inter-rater reliability.

Keep in mind that creating and tagging excerpts involves two important steps:

- Deciding where an excerpt starts and ends

- Deciding which codes should be applied.

There are times when teams want to focus only on the code application decisions. So, Dedoose allows for cloning documents with all excerpts in place. This feature was designed for teams wishing to compare coding decisions on a more ‘apples to apples’ basis. Here's how it works:

- One team member is responsible for creating all excerpts within a document and does so without applying any codes

- Enter Document edit mode by clicking the 'Edit Media' icon in the document view header

- Click the option to 'Clone Document' in the lower portion of the pop-up

- Click 'Yes' to confirm

- Click 'OK' to acknowledge your understanding that the cloned copy will load

- Click the 'Edit Media' icon a second time

- Provide a new title for the copy (we recommend an informative naming convention, e.g., Document 1 title_team user 1 name, Document 1 title_team user 2 name, ...)

- Repeat the cloning and renaming process so each team member has a copy

- Each team member then applies codes to the excerpts in their own copy

- The results can be exported, or compared across documents using multiple computers

- Kappa can also be calculated outside of Dedoose.

This approach has the advantage of allowing direct comparison of coding decisions within the context of a document's content.
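Once each team member has coded their own clone, the comparison can also be done outside Dedoose. The Python sketch below assumes the coding from two clones has already been transcribed by hand into dictionaries that map an excerpt ID to the set of codes applied; the excerpt IDs, the data, and the function name `compare_codings` are hypothetical, and no particular Dedoose export format is assumed. It lists every excerpt and code on which the two coders differed. The same per-excerpt applied/not-applied decisions are also the input needed for a kappa calculation.

```python
def compare_codings(coder_a, coder_b, codes):
    """List (excerpt_id, code, a_applied, b_applied) for every disagreement.

    coder_a and coder_b map an excerpt ID to the set of codes applied to it.
    Because the clones share the same excerpts, both must have the same IDs.
    """
    if coder_a.keys() != coder_b.keys():
        raise ValueError("both copies must contain the same excerpts")
    disagreements = []
    for excerpt_id in sorted(coder_a):
        for code in codes:
            a_applied = code in coder_a[excerpt_id]
            b_applied = code in coder_b[excerpt_id]
            if a_applied != b_applied:
                disagreements.append((excerpt_id, code, a_applied, b_applied))
    return disagreements


codes = ["Reading by Primary Caretaker", "Reading by Others", "Letter Recognition"]

# Made-up coding from two cloned copies of the same document.
user_1 = {
    "E1": {"Reading by Others"},
    "E2": {"Letter Recognition"},
    "E3": set(),
}
user_2 = {
    "E1": {"Reading by Others", "Letter Recognition"},
    "E2": {"Letter Recognition"},
    "E3": {"Reading by Primary Caretaker"},
}

disagreements = compare_codings(user_1, user_2, codes)
for excerpt_id, code, a_applied, b_applied in disagreements:
    print(f"{excerpt_id}: '{code}' user 1={a_applied}, user 2={b_applied}")
```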